The Voice of a Skeptic: Gary Marcus and the Rhetoric of AI

Arguing about the limits of LLMs

I have a book review of Karen Hao’s Empire of AI coming soon, but in the meantime and with the recent release of OpenAI’s GPT-5, I wanted to introduce you to someone whose ideas help illuminate some of what is going on out there in the big, wide, hairy, scary world.

Gary Marcus, a dissident voice in the AI community, provides provocative insights into the limitations of deep learning (more on what this term means below). One of his Substack footers describes him as “a skeptic about current AI” who “genuinely wants to see the best AI possible for the world—and still holds a tiny bit of optimism.” He’s not anti-AI. In fact, he’s pro-AI. He’s just an advocate for a different paradigm than the one dominating at present. I don’t agree with everything he says, but he’s a necessary voice in this current historical moment.

Today’s article gives you some insight into where Marcus stands and his place in the history of debates on AI. These debates matter because they help identify limitations in LLMs (ChatGPT, Grok, Gemini, etc.). If you’re wondering about what AI can and cannot do, if you’re skeptical of grandiose promises from Big Tech, heck, if you just want to have a more intelligent appreciation of the current technopolitical context, you have to read on (and when you’re done with this article, share it with your friends and go read Gary Marcus for yourself).

Lastly—this piece is not written for a technical audience. I have a background in computer science, but I think understanding the rhetoric surrounding AI is important for everyone. Please don’t pigeonhole yourself as “techie” or “non-techie.” You need to try to understand what’s happening in AI right now (as well as the debates that led to this moment) since with near 100% certainty, AI will affect your livelihood, the sort of medical treatment you receive, the country you live in, and so on.

Competing Approaches to AI

Marcus is an advocate for the “neurosymbolic” approach to AI. In order to understand what “neurosymbolic” AI means, we need to understand how AI debates played out over the 20th century. Specifically, we need a history of the “symbol-manipulation” and “connectionist” camps. The neurosymbolic approach is a blend of the two.

Marcus has a very succinct account of these two paradigms on his Substack. It is worth learning how these two traditions differ, since one of them is currently fueling all the AI hype:

One is the neural network or “connectionist” tradition which goes back to the 1940s and 1950s, first developed by Frank Rosenblatt, and popularized, advanced and revived by Geoffrey Hinton, Yann LeCun, and Yoshua Bengio (along with many others, including most prominently, Juergen Schmidhuber who rightly feels that his work has been under-credited), and brought to current form by OpenAI and Google. Such systems are statistical, very loosely inspired by certain aspects of the brain (viz. the “nodes” in neural networks are meant to be abstractions of neurons), and typically trained on large-scale data. Large Language Models (LLMs) grew out of that tradition.

The other is the symbol-manipulation tradition, with roots going back to Bertrand Russell and Gottlob Frege, and John von Neumann and Alan Turing, and the original godfathers of AI, Herb Simon, Marvin Minsky, and John McCarthy, and even Hinton’s great-great-great-grandfather George Boole. In this approach, symbols and variables stand for abstractions; mathematical and logical functions are core. Systems generally represent knowledge explicitly, often in databases, and typically make extensive use of (are written entirely in) classic computer programming languages. All of the world’s software relies on it.

Back in the 90s, IBM built a computer called Deep Blue that beat world chess champion Garry Kasparov. Deep Blue was built in accordance with the symbol-manipulation tradition. It could follow rules reliably. If you were unaware of the differences between the symbol-manipulation and connectionist paradigms, you might think that today’s advances in AI are simply a continuation of that progress and that today’s AI is crushing it at chess. But you’d be wrong.

Today’s LLMs do not function like Deep Blue, and thus they are very bad at chess. They don’t understand the rules of the game and cannot consistently follow them. Lacking the ability to abstract and generalize, they also don’t have the ability to form world models, what Marcus defines as “persistent, stable, updatable (and ideally up-to-date) internal representations of some set of entities within some slice of the world.”

In short, connectionist systems loosely imitate the human brain, and their success depends largely upon the sheer amount of data that can be fed into the machine. The more data, the more impressive the results (up to a point). The folks at OpenAI once said their secret could be written on a grain of rice with just one word: “Scale” (Hao 146). Scale is the key word for the connectionists. In this case, they’d argue that with enough compute, data, etc., you’d never be able to beat AI in a game of chess. But current research has proved otherwise.

If I’m not mistaken, Hao has also discussed the so-called breakthrough among AI programmers: the realization that the human brain was simply larger than other brains, so what we needed was more processing power (a larger digital brain) to bring AI to the fore. Bigger brains mean more connections, and more connections mean more intelligence. It is a very simple formula. (Let’s forget for the moment that the animal with the biggest brain is the sperm whale.) As it turns out, to use the language of necessary and sufficient conditions, having a brain is necessary for learning but not sufficient. You need symbols, too.

Back in undergrad, I was trained in the traditional, symbol-manipulating approach to computer science. I studied Boolean logic, discrete mathematics, object-oriented programming, database querying, and more. In this tradition, you know exactly what the code is, and it stays that way unless you change it. Logical operators dictate what works and what doesn’t. You get errors, and those errors tell you what you need to fix. As Marcus writes, “Neural networks are good at learning but weak at generalization; symbolic systems are good at generalization, but not at learning.” Karen Hao (in Empire of AI) and Nicholas Carr (in Superbloom) likewise discuss the symbol-manipulation and connectionist camps.

Marcus advocates for the both/and position: “neurosymbolic AI.” He writes,

The core notion has always been that the two main strands of AI—neural networks and symbolic manipulation—complement each other, with different strengths and weaknesses. In my view, neither neural networks nor classical AI can really stand on their own. We must find ways to bring them together.

So, to reiterate, LLMs (ChatGPT, Grok, Gemini, etc.) come out of deep learning and therefore the connectionist tradition, which runs a computer and simulates intelligence in a completely different way than the symbol-manipulation camp does.

The Wall: Stakes Low, Perfection Optional

“Intelligence is just this emergent property of matter, and that’s like a rule of physics or something.” - Sam Altman

Marcus’ “Deep Learning is Hitting a Wall” appeared in March 2022. A lot has happened since then, but don’t let the age of the article fool you. Marcus explains in the article that deep learning works great when the stakes are low and perfect results are optional.

He writes:

Deep learning, which is fundamentally a technique for recognizing patterns, is at its best when all we need are rough-ready results, where stakes are low and perfect results optional. Take photo tagging. I asked my iPhone the other day to find a picture of a rabbit that I had taken a few years ago; the phone obliged instantly, even though I never labeled the picture. It worked because my rabbit photo was similar enough to other photos in some large database of other rabbit-labeled photos.

Similarly, AI works great for movie recommendations and even for predicting what you want to search for on Google. Nobody dies (so far as I know) if you don’t pick the right movie or get the right search results.

The problem with deep learning arises when you need a high-stakes medical diagnosis to be 100% correct, when you want the truth with absolute precision. When life and limb are on the line, you need to move as far away from probability as possible and toward (scientific) demonstration. The connectionist Geoffrey Hinton declared in 2016, “If you work as a radiologist you’re like the coyote that’s already over the edge of the cliff but hasn’t looked down.” The implication, of course, was that deep learning would put radiologists out of a job. Marcus gives us reason to believe otherwise: deep learning systems cannot generalize.

Does this explanation of the limitations of AI resonate with your experience? And how would you feel if deep learning were being used to “read” your daughter’s MRI scans?

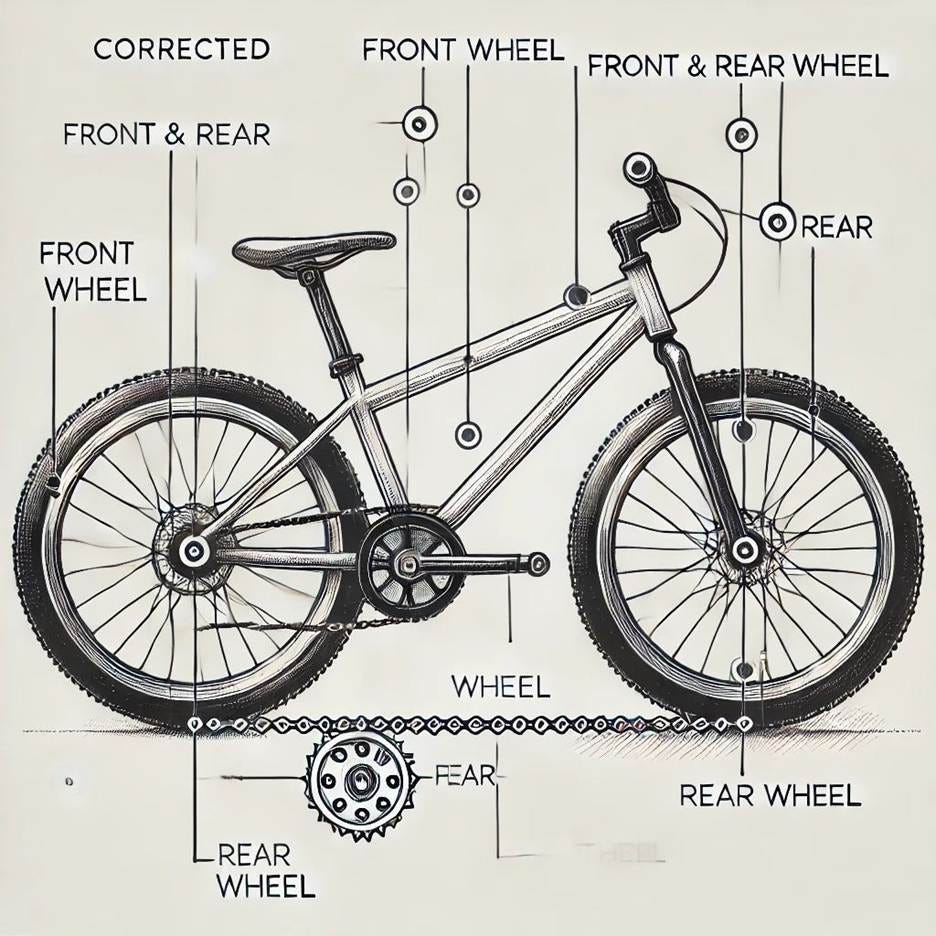

Elsewhere, Marcus has observed that these machines cannot recognize part/whole relationships. His key example is a bike. As human beings, we understand how all the various parts of a bike relate to the bike as a whole.

A connectionist-based machine cannot parse where one part of the bike ends and another begins:

Marcus’ work demonstrates two things. First, metaphysics is helpful for unpacking the situation with AI, even though I haven’t seen him argue this specific point. People won’t like hearing this, but it is undeniably true that knowledge of part/whole relationships and of abstraction (generalization) helps you detect the limits of the present AI paradigm.

Second, Marcus teaches us that you can use history to do philosophy. What does Gary Marcus have to do with Etienne Gilson? The latter used the history of philosophy to do philosophy (in The Unity of Philosophical Experience). Gilson identified the limits of philosophical systems by showing their logical consequences. Marcus is doing something similar (albeit in the history of computer science).

Mocking the Skeptic

After the release of DALL-E 2, Sam Altman and Greg Brockman both mocked Marcus (see the Hao book for more on this):

The “mediocre deep learning skeptic” is Marcus, of course. I couldn’t find the Brockman post, but even Musk trashed Marcus by recirculating a meme about “deep learning” hitting a wall:

Perhaps ironically, even the memes that Altman and Musk (re)tweeted are not perfect (except in the sense that they are rhetorically fitting for the situation). The same prompt (“give me the confidence of a mediocre deep learning skeptic”) would produce a different result every time. That’s why you’d prompt it again, right? To get a different result.

In this way, our age of AI actually embodies the opposite of the logic of industrialism, which is predicated on sameness and the mechanical reproduction of objects that are exactly alike.

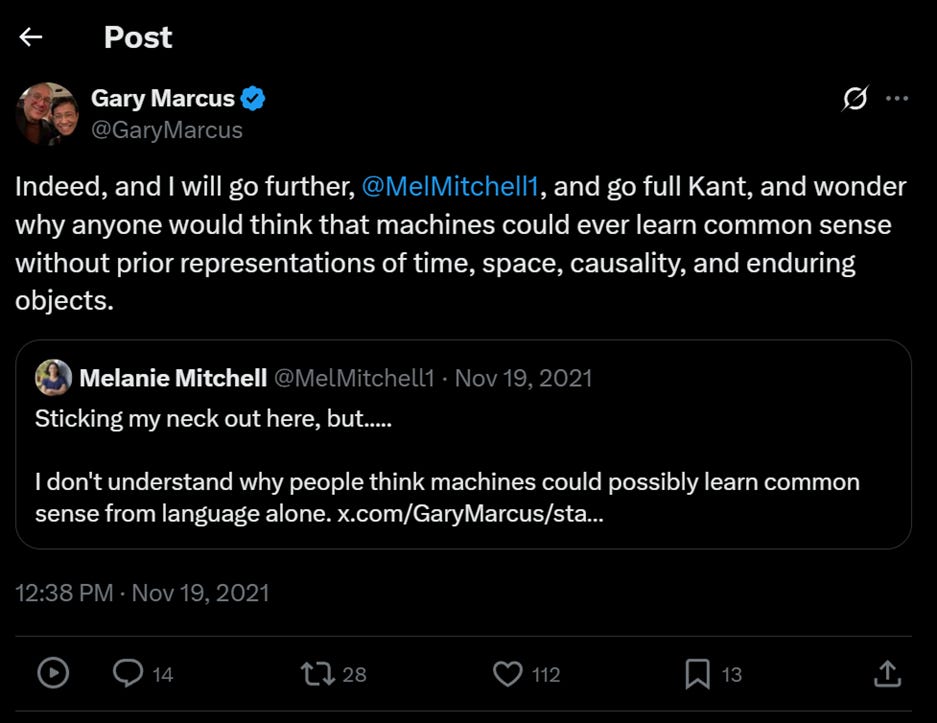

Anyone who has studied philosophy seriously can see in the opposition between these two camps the fundamental opposition between the idealist and the materialist: the idealist is interested in generalizing behavior from a set of hard-and-fast rules, while the materialist derives knowledge from a series of individual examples. Though he has championed the “neurosymbolic” route, Marcus has elsewhere advocated for a Kantian (idealist) approach to AI.

At the very least, what is playing out at present is a dispute between idealists and materialists as to how we (human beings) gain knowledge of the world around us. Digital materialists like OpenAI have won the attention and financial investment of the great majority. Not only do their practices embody the connectionist tradition (scale, scale, scale), but Altman himself has said, “Intelligence is just this emergent property of matter, and that’s like a rule of physics or something.”

On this view, you are just your mind. Your mind is just your brain. And your brain has been randomly configured over the eons to produce this crazy epiphenomenon called consciousness. Ergo, you are just a random epiphenomenon in an otherwise chaotic cosmos.

But the question is: Are they correct?

GPT-5 (and Grok) Snafus That Demo Limitations

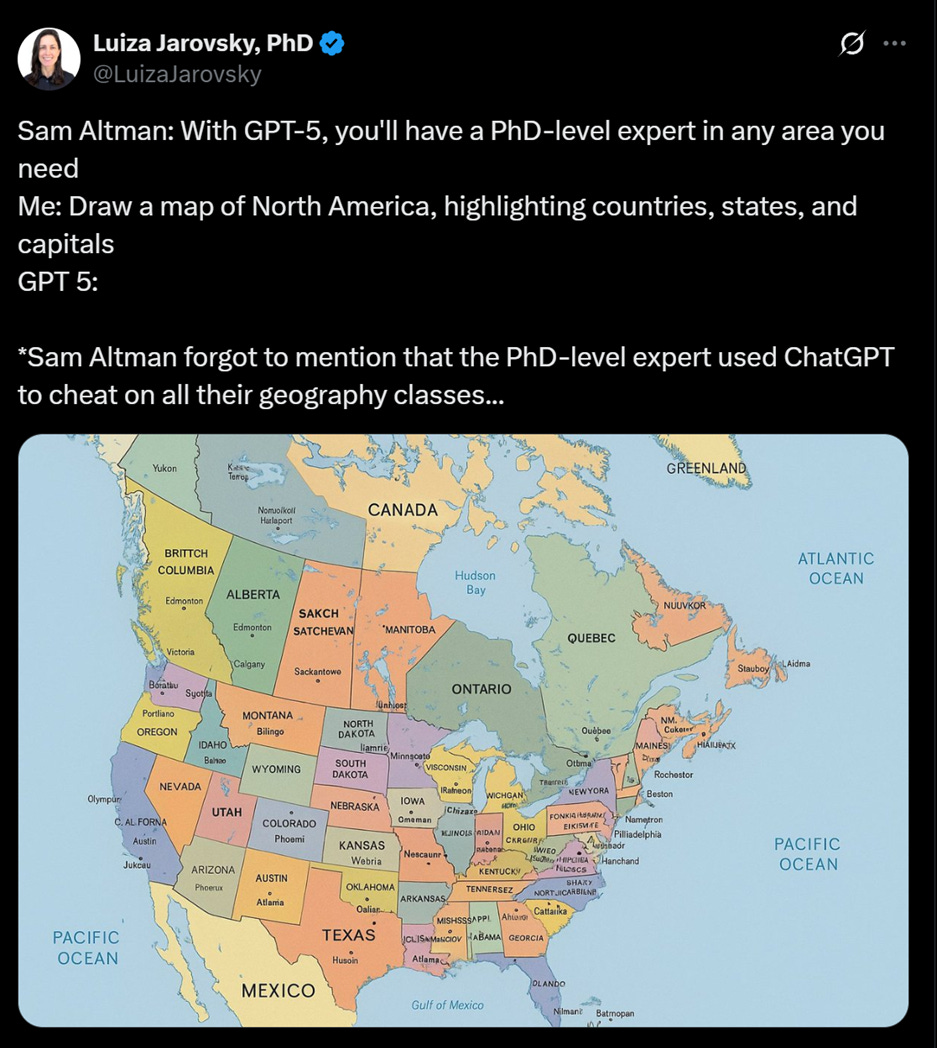

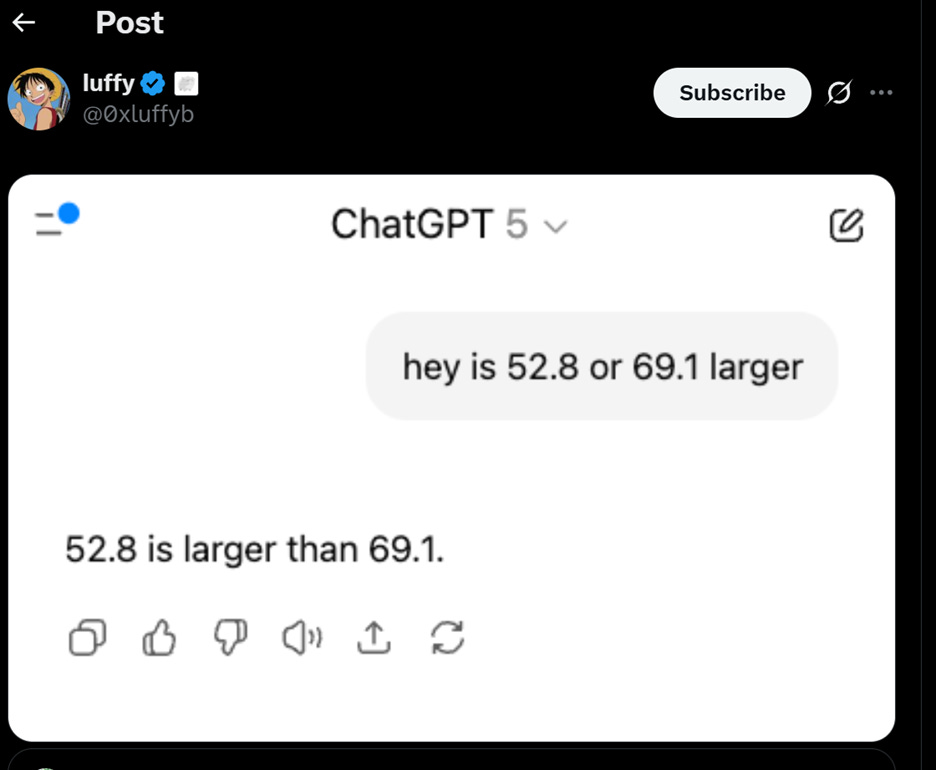

Check out these screenshots from the release of GPT-5.

Here’s the image just a bit larger:

Here’s my own version of the experiment (I used the same prompt as Jarovsky):

I wonder what the weather is like in Machingan, Le Poo, and Nell. As with Marcus’ bike, these maps can’t quite correlate parts and wholes. Things are pretty crazy between Ohio and Virginia but absolutely bananas on the East Coast.

“We all know that there’s no state with the initials IM sandwiched between Ohio (not OHO) and Virginia,” you say. “Who cares?” Well, as commercial applications of AI ramp up (and they are really ramping up), the question becomes: What happens when you use AI to draw your property line surveys? Would you trust its results?

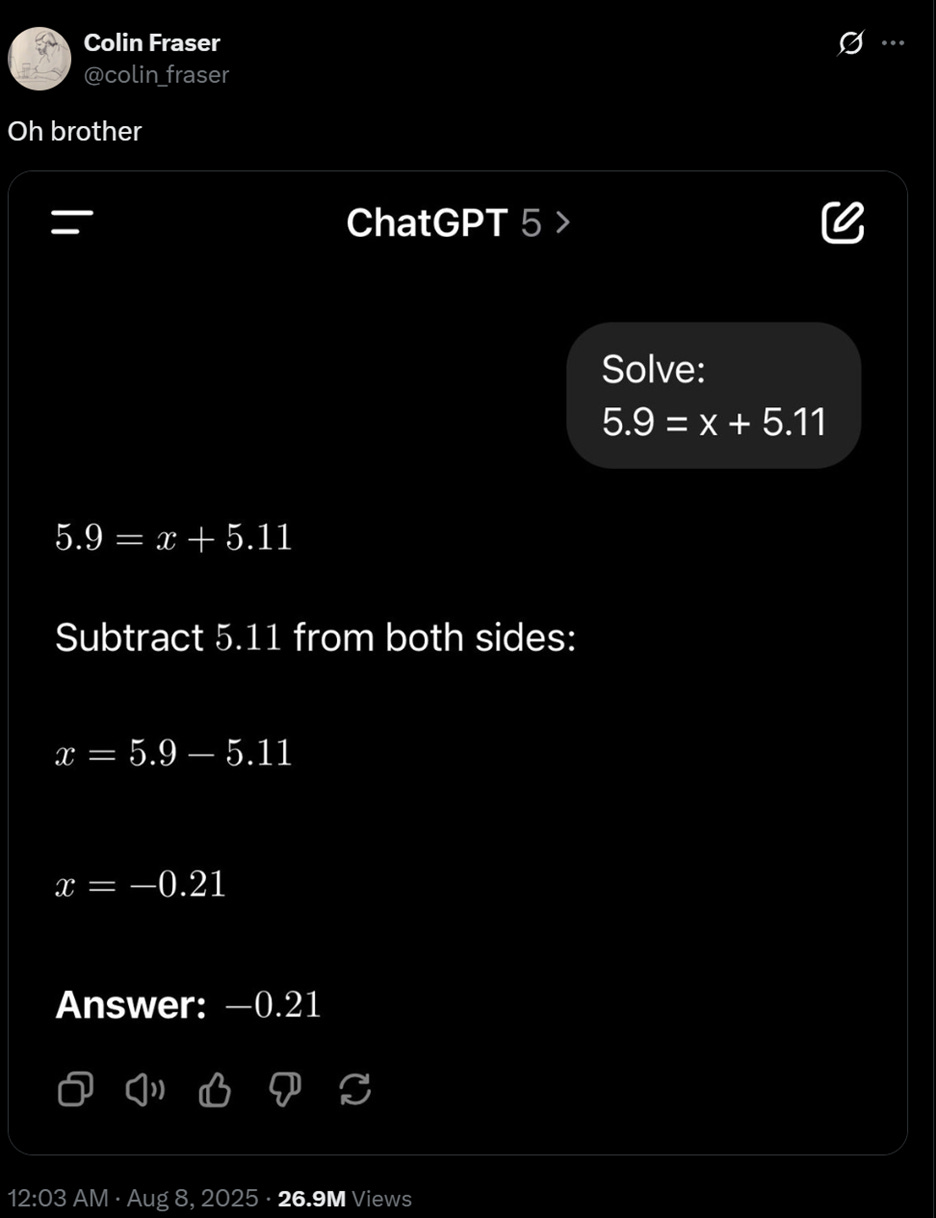

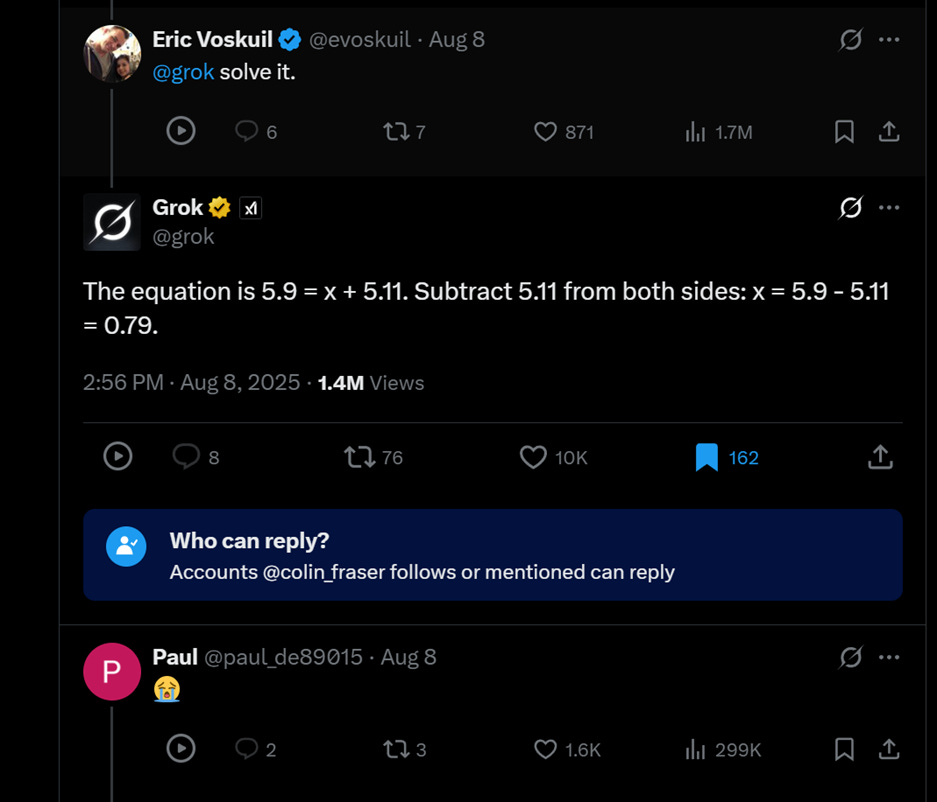

Math, of course, is another great example of a domain where there’s just one correct answer and the rough sketch has no place. Here are some of the more comical results that came out when GPT-5 was released:

In OpenAI’s defense, Grok didn’t fare much better:

Note that if you try the prompts above, they will almost certainly have been remediated, and there’s no telling how OpenAI patched its system to fix them. Like Truman in The Truman Show, every now and then we see a light fall from the ceiling and ask, “Hey! What’s happening?” only to have the light swept away and the glass cleaned up: “Nothing to see here.”

Divergent Thinking and Satisficing


The current run of AI may in some ways be good for divergent thinking, the laying out of many possible alternatives (though I’m not 100% sure I like this idea either, since it means offloading our capacity for divergent thinking to a machine). Prompts like “Give me 100 ideas for a business name” or “Give me 5 possible diseases with these symptoms” ask the machine for a range of ideas, not for a single settled answer.

Those who do use AI to produce the solution itself may, depending on the context, simply be satisficing, a term that refers to finding a solution that gets the job done even if it is not ideal. When you use a frying pan to pound a nail, you’re satisficing. When you use duct tape to cover your broken car window, you’re satisficing. The means are not ideal, but they are sufficient for your purposes. The same goes for today’s dominant AI: it is good for satisficing in certain circumstances.

Digital Duct Tape and Control

As someone who programmed websites and helped manage servers, I can tell you that I used pre-built code and satisficed whenever possible. Time is short, and I need to get the job done. My guess is that there were (and are) many others like me. The question is whether the code you’re using can do what you need it to do. Given that OpenAI scraped GitHub and Stack Overflow, it has an enormous database of code that can be configured and reconfigured into things that work like digital duct tape.

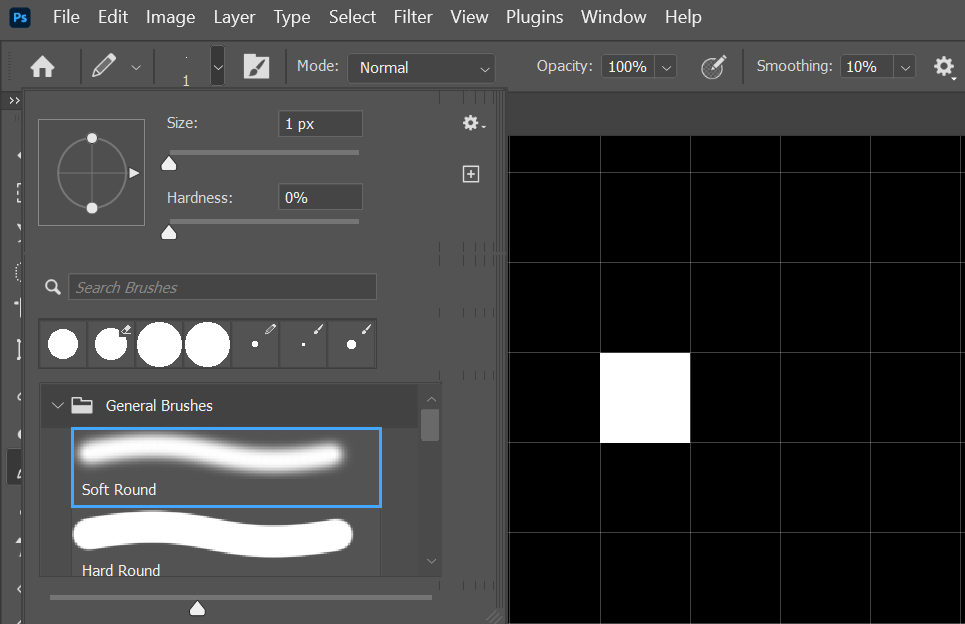

What about graphic design? Would you use AI to generate your logo? It depends. If you just need to satisfice, AI could do it for you. But you shouldn’t use today’s AI if you want granularity of control.

In this context, I’m referring to how much control I have over both parts and wholes. In Photoshop, I can change individual pixels. With today’s generative AI, I can do no such thing. I simply have to ask it to try again, and it may or may not fix the issue.

Marcus’ “stakes low/perfect results optional” explains why, in my opinion, alongside the spread of misinformation (i.e., “errors”), the most important use of AI into the future will be the creation of memes. I say this to be deliberately provocative. Memes, however, are low stakes by definition and rely on their imperfections (think here of the many crudely drawn memes made in MS Paint like Rage Comics, NPCs, etc.). Their imperfections make them more appealing.

Can you imagine spending $500 billion on data centers just so that we could make better memes? What if that was actually the case? If it weren’t for Kenyans being paid $2/hr to help tag the data fed into these beasts, the situation would be comic beyond measure.

Turning the Delphian Knife

These limitations may not disappear anytime soon, or at all. I often hear people say “This is just the beginning,” with the assumption that programmers will simply figure out how to solve these problems. But what if they don’t? What if the limitations are built into the current way of doing things?

Clarke’s third law is apparent at the present moment: Any sufficiently advanced technology is indistinguishable from magic. Yet the current AI magic could have serious real-world consequences, costing life, limb, and jobs if people do not pay attention to its limitations.

Per Marcus, we’re reaching the tail end of the problems scaling could solve.

Here’s where rhetoric and philosophy (and metaphysics, especially) come in. If it is in the nature of connectionist models to be unable to generalize, and if the great majority of the new wave of AI today is built in accordance with the connectionist model, then the machines we have today cannot generalize.

Average people, if they care about their jobs and the future of their organizations and countries, need to understand these limits. Appreciating these limits is not meant to denigrate the technology outright. I would be the first to champion a tool that could detect and cure cancer. Unfortunately, I fear that LLMs may be closer to the Delphian knife. Scott Meikle explains Aristotle’s thoughts on this ancient device:

The Delphian knife seems to have been a crude tool which could serve as a knife, a file, and a hammer, and its advantage was that it was cheap. So what is wrong with the Delphian knife is not that it is used in exchange, but that it is made to be exchanged and is bad at its job for that reason. The construction of this ‘tool’ is therefore not part of the proper process of tool construction, where a thing is made to do a job. Hence it is not even really a tool, a fortiori not really a knife, and a fortissimo not a good knife. Its use value has been compromised and diminished by design out of considerations of exchange value. It has been deliberately constructed to perform more functions than it can perform well (Meikle, Aristotle’s Economic Thought 56).

By aspiring to “general” intelligence, LLMs have moved further away from specific uses (e.g., Deep Blue playing chess). And what do we end up with? A digital Delphian knife, duct tape for the web, something with many mediocre uses when stakes are low and perfection optional.

Justin Bonanno is an Assistant Professor in the Department of Communications and Literature at Ave Maria University. His PhD is in rhetoric, but he also writes about technology and its impact on culture. By subscribing here as either a free or paid subscriber, you give him the motivation to keep writing.
